Generative AI vs. Teachers: insights from a literature review

IA generativa versus profesores: reflexiones desde una revisión de la literatura

Dr. Andres Chiappe. Full Professor. Universidad de la Sabana. Colombia

Ms. Carolina Sanmiguel. Professor. Fundación Universitaria Navarra. Colombia

Dr. Fabiola Mabel Sáez Delgado. Professor. Universidad Católica de la Santísima Concepción. Chile

Received: 2024/07/08 Reviewed: 2024/05/28 Accepted: 2024/12/06 Online First: 2024/12/14 Published: 2025/01/07

How to cite this article:

Chiappe, A., Sanmiguel, C., & Sáez Delgado, F. M. (2025). IA generativa versus profesores: reflexiones desde una revisión de la literatura [Generative AI vs. Teachers: insights from a literature review]. Pixel-Bit. Revista De Medios Y Educación, 72, 119–137. https://doi.org/10.12795/pixelbit.107046

ABSTRACT

The growing integration of artificial intelligence in universities is reshaping higher education, particularly through the use of chatbots and generative language models. This article conducts a literature review, applying PRISMA guidelines to 155 peer-reviewed articles, to examine the advantages, limitations, and pedagogical applications of AI compared to human teaching. Three main scenarios of impact on educational practices were identified: (a) loss of certain traditional aspects of teaching, such as exclusive information transmission and reporting tasks; (b) transformation of roles, including control over educational content and the didactic contract; and (c) emergence of new elements, such as personalized learning and innovative evaluation approaches. Despite their potential to automate processes and save time, chatbots cannot replicate essential human qualities like empathy and adaptability. Therefore, their optimal integration requires thorough pedagogical analysis to balance innovation with educational effectiveness. This work is valuable for researchers, educators, and instructional designers seeking to understand how to leverage AI without compromising teaching quality. It represents a crucial step toward the development of AI integration strategies grounded in solid pedagogical principles.

KEYWORDS

Generative Artificial Intelligence; Teacher Practices; Educational Innovation; Higher Education; Pedagogical Transformation; Chatbot Applications in Education.

1. Introduction

Pilot implementations of chatbots in education are reported with increasing frequency, as part of a growing and increasingly complex trend of incorporating digital technologies to support teaching and learning (Chen et al., 2023; Tlili et al., 2023).

In this regard, Salvagno et al. (2023) describe chatbots as programs capable of sustaining a conversation with people through natural language processing. Chatbots, which can combine text and voice, can recognize expressions, understand perspectives, and offer insights, drawing on continuous training based on their users' responses and interactions. In other words, chatbots are software tools that allow users to interact on a given topic or specific domain in a natural, conversational way through text and voice (Smutny & Schreiberova, 2020). They have been used for many purposes across a wide range of domains, and education is no exception.

The few recent studies on the subject, together with the information available in the press and academic networks, indicate considerable confusion and fear regarding the use of these digital tools, mainly related to plagiarism (King, 2023) and, more generally, to the loss of relevance of many of the learning and evaluation activities traditionally assigned to students (Surahman & Wang, 2022).

In this scenario, it is vitally important to offer a

reflective approach from a pedagogical perspective on this matter, so that it

is useful for researchers and educators, and thus identify its possibilities

and main risks for its proper implementation in the framework of higher

education. The path to understanding, at least in an incipient way, the

potential and risks of using chatbots in education, it seems that almost

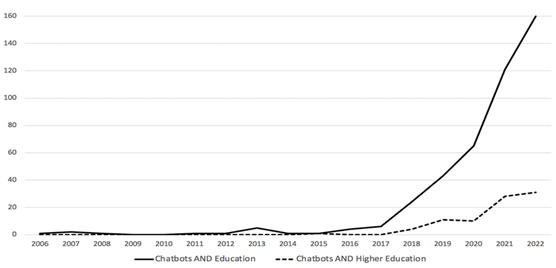

everything is still to be done, according to what is indicated in Figure 1,

where the research panorama is shown in this subject, published in

peer-reviewed journals indexed in Scopus.

Figure 1

Published articles on

“chatbots AND education” in peer-reviewed journals indexed in Scopus

Figure 1 highlights that research interest in the use of chatbots in education has grown exponentially over the past five years. However, the number of articles published per year is still relatively low, with an average of just over 100 articles per year in the last four years. These findings suggest that, despite the increasing interest, a great deal of research remains to be conducted in this area.

Enthusiasts of technological advancement regard artificial intelligence (AI) as a permanent fixture in our society, a view supported by research findings and by its current growth and presence in various aspects of human life (S. Lee et al., 2022). Most AI initiatives aim at continuous improvement, which raises expectations for its continued use. The integration of intelligent algorithms has revolutionized digital technologies in our daily lives, particularly through automated problem-solving processes (Raphael, 2022) and personalized digital services (Maksimova et al., 2021).

However, AI also raises concerns about privacy (Hu & Min, 2023), information security (S. Lee et al., 2020), and bias and the reliability of decision-making systems (Qiu et al., 2022; Sun et al., 2022), issues discussed from different critical perspectives. Among the recent AI developments are chatbots, also known as conversational robots, agents, or personalized assistants, which interact and "talk through text" with human users. They have been used mainly in customer service systems (Antonio et al., 2022), personal and home assistance, e-commerce, marketing and business management (Reis et al., 2022), transportation and logistics (Aksyonov et al., 2021), and citizen-government interaction.

Chatbots are based on natural language models, which assimilate the structure of human language, identify patterns, make predictions, and generate conversational responses through algorithms trained on large amounts of data (C.-C. Lin et al., 2023). There are two types: "open" or general chatbots, available to the public and able to answer questions on varied topics; and "closed" or specific chatbots, designed for particular fields such as customer service or patient care (Wilson & Marasoiu, 2022). Their creation requires substantial information to answer diverse user questions, as well as constant updating and training to keep responses relevant, which involves significant time and cost (Al-Tuama & Nasrawi, 2022).

In education, chatbot use is emerging and generating interest, though academic publications remain scarce because of its novelty (Bailey & Almusharraf, 2021). The initial literature shows positive expectations, focusing on the intent and application of AI developments in university courses as virtual assistants or tutors, supporting mass or self-directed learning models (Hsu & Huang, 2022), or mediating students' emotional regulation (Benke et al., 2020). While some skeptics exist (Winkler & Söllner, 2018), recent reviews have examined chatbots for Facebook Messenger as learning support (Smutny & Schreiberova, 2020), attempts to use chatbots in education (Kuhail et al., 2023), generative AI research trends in educational praxis (Bozkurt, 2023), chatbot use trends in educational contexts (Hwang & Chang, 2023), and the benefits, opportunities, challenges, and perspectives of AI chatbots in education (Labadze et al., 2023). However, a specific review complementing these objectives is required to further explore the potential benefits and suitability of natural language model advancements for higher education.

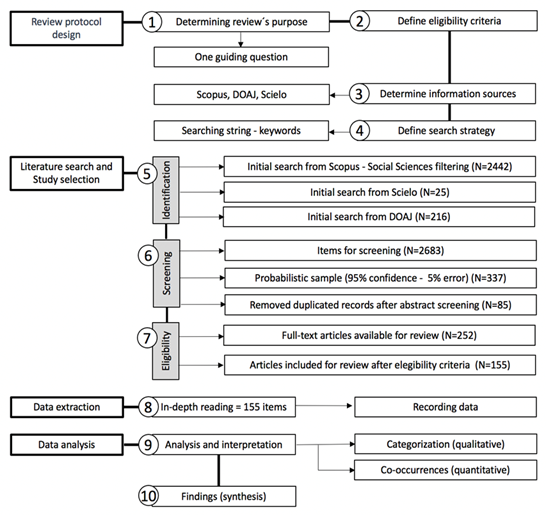

2. Methodology

According to Carrera-Rivera (2022), conducting a literature review facilitates the identification of specific ideas or patterns of ideas that contribute to understanding extensive information. In this study, the literature review process followed the phases described by that author, articulated with the guidelines of the PRISMA method, as illustrated in Figure 2.

Figure 2

Review method design

2.1 Review protocol design

The initial stage of the literature review involved determining its purpose: to identify the key transformations in teaching practices resulting from the increased use of chatbots and other developments in artificial intelligence. To guide the review, the following research question was formulated: "What are the effects of chatbot implementation on teacher practice?" The next step involved selecting appropriate sources of information.

Scopus, a comprehensive journal database known for its rigorous review and editorial processes, was chosen for its broad coverage and diverse range of journals, with Scielo and DOAJ as complementary databases. According to Pranckutė (2021), these databases have high academic and scientific recognition because of the rigor of their blind peer review processes and their strict editorial policies, which ensures good quality of the sources to be reviewed. In addition, this set of databases provides broad thematic coverage and a large number of high-impact journals to work with. Finally, Scopus in particular offers reviewers a set of data analysis tools that are very useful in the initial stages of the review.

To address the review question, a keyword string was

applied in Scopus, comprising the following terms:

TITLE-ABS-KEY ("teacher practice" OR "teaching practice" OR teaching) AND (chatbots OR "artificial intelligence") AND (LIMIT-TO (SUBJAREA, "SOCI"))

2.2 Literature search and study selection

In this phase, three characteristic processes of the

PRISMA method were applied: identification, screening, and eligibility.

The initial search yielded a total of 2683 documents after social science filtering (Scopus = 2442, Scielo = 25, and DOAJ = 216). To ensure a suitable sample for further analysis, a probabilistic representative sample of 337 documents was calculated, with a 95% confidence level and a 5% margin of error.

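For the calculation of this sample S, the following formula was applied, where N = the size of the initial set of documents, e = the margin of error, and z = the z score, defined as the number of standard deviations that a given proportion deviates from the mean. With the expected proportion p taken as 0.5 (an assumption; the value is not stated), the standard finite-population form consistent with these definitions is:

$$S = \frac{\dfrac{z^{2}\,p(1-p)}{e^{2}}}{1 + \dfrac{z^{2}\,p(1-p)}{e^{2}N}}$$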
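The reported sample of 337 can be reproduced with a short calculation. The sketch below assumes the standard finite-population sample size formula with an expected proportion of p = 0.5 and z = 1.96 for a 95% confidence level; neither value is stated in the text, and the function and parameter names are illustrative.

```python
import math


def sample_size(population, z=1.96, margin=0.05, p=0.5):
    """Finite-population sample size (assumed formula, not confirmed by the source).

    population: N, size of the initial set of documents
    z:          z score for the confidence level (1.96 assumed for 95%)
    margin:     e, the margin of error
    p:          expected proportion (0.5 assumed, the most conservative value)
    """
    # Sample size for an unlimited population.
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    # Correct for the finite population and round up to a whole document.
    return math.ceil(n0 / (1 + n0 / population))


print(sample_size(2683))  # 337
```

Rounding up rather than to the nearest integer is a deliberate choice here: it keeps the sample at or above the size needed for the stated margin of error.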

Finally, 85 duplicate articles (repeated across the databases) were eliminated.

As part of the eligibility step, an abstracting process was conducted in which inclusion and exclusion criteria were applied to ensure the relevance and quality of the included studies. Studies were included if (1) they directly addressed the use of chatbots or artificial intelligence in educational contexts from a pedagogical perspective; and (2) they presented empirical data supporting the reported findings. Additionally, articles had to be published in peer-reviewed journals indexed between 2015 and 2023 and written in English or Spanish. As exclusion criteria, duplicate studies, theoretical reviews without empirical data, and works that did not offer clear contributions to the review's objective were discarded. These criteria ensured a pertinent, up-to-date, and methodologically sound research corpus. The documents that met these criteria comprised the set subjected to in-depth reading (n = 155).

To ensure the rigor of this review, a systematic evaluation of the quality of the included studies was conducted. Each article was assessed based on thematic relevance, applied methodology, and the robustness of the reported findings. The evaluation focused on parameters such as clarity of objectives, validity of methods, reliability of data collection and analysis, and well-supported conclusions. This evaluation allowed prioritization of studies that provided significant and well-documented contributions to analyzing the effects of chatbots in education.

2.3 Data Extraction and Analysis

The data extraction phase involved meticulously reading each selected article and recording relevant information in a documentation matrix, where the data was systematically analyzed. The data analysis followed a mixed approach combining qualitative (grouping and categorization) and quantitative techniques (analysis of frequencies or co-occurrences). Initially, open coding was applied to identify emerging concepts and patterns, which were then organized into main thematic categories through inductive analysis. Subsequently, axial coding was employed to establish relationships between categories, enabling a deeper understanding of the studied phenomena.

The analysis of co-occurrences involved examining how often specific themes or keywords appeared together within the same article or section. A co-occurrence matrix was created to quantify and visualize the relationships between different concepts. For instance, themes such as "pedagogical transformation," "personalized learning," and "student engagement" were frequently linked, indicating a strong interrelation in the context of AI applications in education. This step was facilitated by specialized software for text analysis, ensuring precision and consistency. Finally, the results of the frequency and co-occurrence analysis were synthesized into visual representations, such as heatmaps or network diagrams, to highlight the most significant connections and patterns.
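A frequency and co-occurrence count of this kind can be sketched in a few lines. The example below is only an illustration: the coded articles are hypothetical, the review's actual software and data are not specified in the text, and the function names are invented for the sketch.

```python
from collections import Counter
from itertools import combinations

# Hypothetical theme codes assigned to four articles (illustrative, not the review's data).
coded_articles = [
    {"pedagogical transformation", "personalized learning"},
    {"personalized learning", "student engagement"},
    {"pedagogical transformation", "personalized learning", "student engagement"},
    {"assessment"},
]


def theme_frequencies(articles):
    """Count the number of articles in which each theme appears."""
    return Counter(theme for themes in articles for theme in themes)


def cooccurrence_counts(articles):
    """Count the number of articles in which each unordered pair of themes appears together."""
    counts = Counter()
    for themes in articles:
        # Sorting gives every pair a single canonical order.
        for pair in combinations(sorted(themes), 2):
            counts[pair] += 1
    return counts


frequencies = theme_frequencies(coded_articles)
pairs = cooccurrence_counts(coded_articles)

for (first, second), n in pairs.most_common():
    print(f"{first} + {second}: {n}")
```

The pair counts are the off-diagonal cells of a symmetric co-occurrence matrix, and the theme frequencies are its diagonal; either can be passed to a plotting library to draw a heatmap or network diagram.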

The final phase of the review encompassed synthesizing, interpreting, and compiling the results into a coherent text. The findings were structured according to the IMRaD (Introduction, Methods, Results, and Discussion) format, facilitating a comprehensive understanding of the research outcomes. In this stage, both qualitative and quantitative analyses were performed, ensuring a rigorous examination of the collected data. The researchers meticulously analyzed the data for accuracy and relevance, extracting key insights and trends. Subsequently, the synthesized findings were interpreted to provide a deeper understanding of the research subject. Finally, the researchers organized and compiled the results into a cohesive text, presenting the methodology, results, and subsequent discussions systematically and logically.

3. Analysis and results

3.1 Main effects of chatbot implementation on teacher work

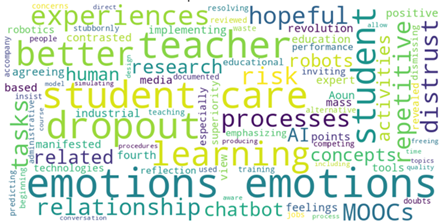

Figure 3 provides a visual representation of the key themes and concepts emerging from the analysis of the integration of artificial intelligence and chatbots in education.

Figure 3

Key themes and concepts related to results

One of the first issues identified in the literature regarding AI-based tools is the emotional response to their implementation. While 35.6% of studies express a hopeful and positive outlook on chatbots in education, 28.2% reflect feelings of risk and distrust, often echoed in the media. Aoun (2017) highlights that AI and robotics have outperformed humans in specific tasks, prompting reflection on roles where humans excel, such as fostering creativity and adaptability, and discouraging outdated training practices. This perspective is supported by López Regalado et al. (2024) and Villegas-José and Delgado-García (2024).

As documented in 67.3% of reviewed studies, chatbots are increasingly used in education for tasks such as administrative support and dropout prediction. They also assist teaching by addressing student doubts and simplifying complex topics (K.-C. Lin et al., 2023). Moreover, 28.7% of articles emphasize that automating repetitive tasks for teachers can improve teaching quality by freeing time for course design and personalized feedback (Su & Yang, 2023). Chatbots also encourage student participation by providing a pressure-free environment for inquiries.

In massive education models like MOOCs, chatbots play a complementary role, simulating teacher-student interactions otherwise limited by scale. Although only 7.4% of studies explore chatbots in MOOCs, their relevance in digitally mediated learning is considerable, as observed by Li (2022) and Bachiri and Mouncif (2023). These findings underscore both the potential and the limitations of chatbots in education, which require further exploration.

3.2 Disruption-related results

According to Aoun (2017), from time to time technological developments appear on the human scene with sufficient capabilities to radically transform life in all its dimensions. It happened with the industrialization and mechanization brought by steam technology, with electricity, with the Internet, and now with robotics and artificial intelligence. In this regard, those who have studied these phenomena agree that, in terms of jobs and professional spaces, the arrival of such technologies always means that some are lost, some are transformed, and others emerge (Mesquita et al., 2021).

Applied to the subject of this text, this reflection leads us to ask: because of artificial intelligence, which aspects of a teacher's work will be lost? What should be transformed? What new roles should the teacher assume? In other words, what would a teacher do better than a robot or an artificial intelligence system?

Therefore, through some of the results of this review, we want to address possible answers to these questions, which become essential for teachers' relevance within an educational system that is taking increasingly decisive steps toward the structural incorporation of transformative technologies such as artificial intelligence. The following results are organized from this point of view.

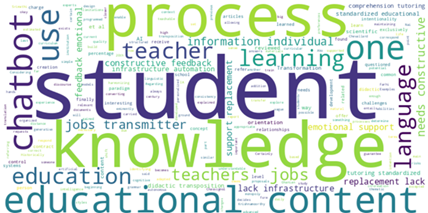

Figure 4 provides a visual representation of the disruption-related results.

Figure 4

Disruption-related results

3.2.1 About what teachers will miss out on due to chatbots

Because the scope of pedagogy encompasses education in its entirety, many of the challenges commonly encountered in educational practice are likely to be affected by the emergence of robust digital technologies such as artificial intelligence. Consequently, a pedagogical perspective must be employed to analyze and understand these changes calmly and as a matter of course. This will aid teachers in adapting to new discourses and professional practices. The literature points to the following issues:

Loss #1: The teacher's role as a transmitter of information.

Since the mid-1990s, concerns have emerged about digital technologies threatening teachers' jobs. Most of the reviewed literature (72.8%) highlights the growing role of AI in education, which gives students access to vast information in diverse formats and fuels tensions between teachers and chatbots (Malik et al., 2021; Safadel et al., 2023). However, the idea that chatbots will eliminate teachers' roles as information transmitters is debated.

Chatbots currently lack the ability to recognize individual student characteristics, limiting their capacity to adapt to diverse learning needs. In contrast, teachers excel at personalizing instruction, offering feedback, and providing emotional support, roles that AI cannot fully replicate (Meng & Dai, 2021). These human-centered elements remain central to effective education.

Nonetheless, chatbots could replace the informational role of some teachers by providing precise and readily accessible content. This is more likely in contexts where teaching focuses solely on delivering information. However, in regions with limited digital infrastructure, teachers remain essential as content transmitters. This underscores that while AI can supplement education, its impact is shaped by context, infrastructure, and teaching approaches. The relationship between AI and educators should focus on complementarity rather than replacement, ensuring that human-centric teaching continues to enrich educational experiences.

Loss #2: Homework exclusively related to reporting data or information.

Chatbots provide students with immediate access to information and answers, eliminating the need to spend hours searching across various sources. As noted in 17.4% of reviewed articles, this capability allows students to quickly obtain necessary information through text chats, streamlining tasks that previously relied on extensive data gathering. Consequently, assignments focused on reporting information have become less relevant, enabling both students and teachers to focus more on tasks that involve analyzing and understanding the acquired information, as noted by Fidan and Gencel (2022) and Malik et al. (2021).

This shift necessitates a transformation in the design of homework and educational activities. Assignments should aim to strengthen students' abilities rather than diminish their learning opportunities due to over-reliance on chatbots. Moreover, higher education institutions should consider adopting tools for similarity verification and detecting machine-generated writing. This would introduce scenarios where artificial intelligence is used to identify AI-generated content.

However, the emphasis in evaluation should shift away from the production of text itself. Instead, the focus must be on students' ability to comprehend, analyze, and engage with the text. This ensures that educational assessments prioritize critical thinking and understanding over rote production, aligning learning objectives with the evolving use of AI technologies in education.

Loss #3: Evaluation for all equally based solely on memory.

In light of the above, a third issue, drawn from 8.2% of the reviewed literature, concerns the assessment of learning. When a student relies on chatbots to report information, evaluation mechanisms focused on such processes no longer make sense. For this reason, it will be important to resort to other ways of assessing learning results, such as discussions, debates, projects, portfolios, or practices that, in addition to allowing verification of the authenticity of the student's intellectual production against the possibility of chatbot use, allow the teacher to observe the student's performance directly. Studies that address these topics include Ledwos et al. (2022) and Chou (2023).

This amounts to a call for formative over summative assessment, so that the various possibilities of AI are used as part of learning assessment activities.

On the other hand, involving chatbots and other developments based on artificial intelligence in the evaluation of learning could lead to evaluation processes in which different methods and instruments are applied to different students. Perhaps we are at the beginning of the fall of homogenized, standardized evaluation.

3.2.2 About transformations that will affect teachers due to chatbots

Some of the issues that will tend to be transformed by the progressive use of chatbots in education relate to what Zambrano (2005) points out about pedagogy, conceived as a discourse on the relationships between teachers, students, and the school and social environment, and on the forms that the orientation of knowledge takes.

Transformation #1: About control over the intentionality and orientation of educational content.

Considering the above, a small percentage of the reviewed articles (5.8%) report that the use of chatbots in education involves a transfer of the monopoly of control that teachers and the school have so far held over the intentionality and orientation of students' learning content. Historically, students receive during their school life a set of structured knowledge in the form of curricular proposals, which someone has judged to correspond to what should be learned. So, with what intention has the curriculum been organized in this way? Is it acceptable for some unspecified person to determine what another person should learn? Who decides this? Certainly not the student. This has not been questioned enough; it is accepted as part of the current paradigm of education and, with the use of artificial intelligence developments in education, is beginning to be questioned. Some of these arguments can be found in Farhi et al. (2022) and Chassignol et al. (2018).

In this sense, the chatbot can offer a personalized learning experience, adapted to the needs and preferences of the student, allowing them to explore and build their knowledge in a more autonomous way (Srimathi & Krishnamoorthy, 2019). However, this paradigm shift also entails certain challenges and risks, one of the main ones being maintaining a high level of quality and consistency in content and learning orientation, since the chatbot cannot always guarantee that students receive correct and relevant information.

Transformation #2: Who will be in charge of the didactic transposition?

A small percentage (4.2%) of reviewed documents address educational content creation processes, specifically focusing on didactic transposition. This concept, developed in the 20th century, describes the transformation of scientific knowledge into teachable material and ultimately into knowledge that students can understand and learn (Chevallard, 1998). This "translation" process ensures content aligns with students' cognitive development, language, and prior knowledge, and has traditionally been managed by teachers or subject-matter experts.

Generative AI is now playing a role in didactic transposition, as natural language models are designed not only to provide answers but also to simplify and explain scientific knowledge in accessible terms. This linguistic capability positions AI as a valuable tool in harmonizing complex concepts with everyday language.

Moreover, AI systems can be trained to identify individual learning styles, limitations, and abilities, allowing the transposition process to cater more closely to each student's needs. This enables a more personalized approach to learning, complementing teachers' roles in content adaptation. By supporting these processes, AI has the potential to enhance educational content delivery, ensuring accessibility and relevance. Examples of such AI applications in content creation are discussed by Ohanian (2019) and Ako-Nai et al. (2022), demonstrating its growing influence in educational innovation.

Transformation #3: The didactic contract.

Finally, a small share of the reviewed articles (3.7%) refer to potential changes in teacher-student relationships. In the context of chatbot use in education, the "didactic contract" becomes an important concept for such relationships, with large and complex challenges ahead.

The didactic contract refers to the tacit agreement between the teacher and the student about what is expected to happen in the classroom and how learning will take place. This contract establishes the rules and expectations for learning and can influence how chatbots are used in the classroom (Caldeborg et al., 2019).

In the context of chatbot use in education, the didactic contract can be challenged by the introduction of new technological tools. For example, students may expect a more personalized interaction with the chatbot, which may require the teacher to adapt their teaching approach and strategies to meet those needs. Research related to changes in classroom relationships can be found in Garito (1991) and Lo et al. (2021).

4. Discussion and Conclusions

The deployment of AI chatbots in educational settings presents a multifaceted issue that demands profound pedagogical examination. The use of chatbots and AI tools in education introduces significant changes in pedagogical practices. Chatbots can automate repetitive tasks, such as answering common questions, allowing teachers to focus on higher-value activities like lesson design and personalized student support. This shift can foster active learning and collaboration in the classroom. However, these tools require teachers to adapt their roles, acting as facilitators and mediators of responsible technology use. Chatbots promote self-directed learning but demand critical skills to evaluate information. Additionally, assessments must emphasize critical thinking and creativity rather than memory-based tasks. In this regard, the teacher-student relationship remains crucial. While chatbots personalize learning, human interaction fosters empathy, motivation, and emotional support. Effective AI integration must align with pedagogical principles that prioritize holistic student development.

AI-driven chatbots hold significant promise in automating teaching tasks, offering efficiencies and accessibility previously unattainable. Nevertheless, they cannot fully replicate the unique qualities of human interaction essential to education, such as empathy, emotional intelligence, adaptability, and the ability to inspire and motivate learners. Indeed, these deeply human attributes transcend mere information transmission and often resist replication by even the most advanced algorithms.

Therefore, integrating AI chatbots into education requires a critical assessment of their strengths and limitations from a pedagogical perspective. For instance, research should identify areas where chatbots excel, such as automating repetitive tasks, while highlighting their shortcomings, particularly in fostering meaningful human connections. By doing so, educators can leverage chatbots in tasks where automation is beneficial, freeing instructional time for activities that demand the irreplaceable human touch.

In this context, the interaction between generative

chatbots and teachers represents a dynamic relationship where both must

complement each other's strengths to create an effective educational system.

Consequently, future studies should examine chatbot-student interaction designs

and explore the impact of chatbot personality and location on learning outcomes

and satisfaction. Furthermore, the rapid evolution of AI in education

necessitates mechanisms to maximize its potential while addressing challenges

such as the lack of emotional intelligence and the need for ethical use.

As tools like ChatGPT gain prominence, it becomes

evident that guidelines for their responsible adoption are critical (Tlili et al., 2023). Thus, collaboration between educators,

instructional designers, researchers, and AI developers is essential to

establish pedagogical principles that balance technological innovation with the

preservation of human elements. Ultimately, by achieving this balance, emerging

technologies can promote improved learning experiences and vital life skills,

such as self-regulation, ensuring that AI complements rather than replaces the

invaluable role of human educators (Bozkurt, 2023).

4.1 Limitations and Recommendations

This review, while comprehensive, has limitations.

Most studies analyzed come from specific, well-resourced educational contexts,

limiting generalization to environments with fewer technological resources or

differing cultural attitudes toward AI. Moreover, the focus on recent studies

reflects an evolving landscape, but the long-term impacts of chatbots remain

underexplored. Additionally, methodological inconsistencies across studies make

direct comparisons challenging. Finally, while frequency and co-occurrence

analysis identified key trends, it may overlook deeper nuances. Future research

should include qualitative methods, such as case studies, to better understand

the contextual and subjective effects of chatbots on education.

To optimize the integration of

chatbots in education, institutions should adopt a balanced approach that

combines technological innovation with robust pedagogical principles. Teachers

should receive training on effectively leveraging chatbots to complement, not

replace, their instructional practices. Curricula must be updated to emphasize

critical thinking, creativity, and digital literacy, enabling students to

navigate AI-enhanced learning environments responsibly. Developers should collaborate

with educators to design chatbots tailored to diverse educational contexts,

ensuring inclusivity and adaptability. Additionally, further research is needed

to explore long-term impacts, particularly on student engagement and

teacher-student dynamics, while addressing ethical concerns such as data

privacy and bias.

Authors' Contributions

All the authors participated equally in the following

processes according to the CRediT Taxonomy: Conceptualization, data curation

and formal analysis, research and methodological design, writing of the

original draft and its final review and editing. In addition, Andrés Chiappe is

the corresponding author.

Acknowledgments

We thank the Universidad de La Sabana (Group

Technologies for Academia – Proventus, Project EDUPHD-20-2022), Fundación

Universitaria Navarra - Uninavarra and Universidad Católica de la Santísima

Concepción, for the support received in the preparation of this article.

References

Ako-Nai, F., De La Cal Marin,

E., & Tan, Q. (2022). Artificial Intelligence Decision and Validation

Powered Smart Contract for Open Learning Content Creation. In J. Prieto, A.

Partida, P. Leitão, & A. Pinto (Eds.), Blockchain and Applications

(Vol. 320, pp. 359–362). Springer International Publishing.

https://doi.org/10.1007/978-3-030-86162-9_37

Aksyonov, K. A., Ziomkovskaya,

P. E., Danwitch, D., Aksyonova,

O. P., & Aksyonova, E. K. (2021). Development of

a text analysis agent for a logistics company’s Q&A system. Journal of

Physics: Conference Series, 2134(1), 012021.

https://doi.org/10.1088/1742-6596/2134/1/012021

Al-Tuama, A. T., & Nasrawi, D. A. (2022). A Survey on the Impact of Chatbots

on Marketing Activities. 2022 13th International Conference on Computing

Communication and Networking Technologies (ICCCNT), 1–7.

https://doi.org/10.1109/ICCCNT54827.2022.9984635

Antonio, R., Tyandra, N., Nusantara, L. T., Anderies, &

Agung Santoso Gunawan, A. (2022). Study Literature

Review: Discovering the Effect of Chatbot Implementation in E-commerce Customer

Service System Towards Customer Satisfaction. 2022 International Seminar on

Application for Technology of Information and Communication (iSemantic), 296–301.

https://doi.org/10.1109/iSemantic55962.2022.9920434

Aoun, J. E. (2017). Robot-Proof. The MIT Press.

https://mitpress.mit.edu/books/robot-proof

Bachiri, Y.-A., & Mouncif,

H. (2023). Artificial Intelligence System in Aid of Pedagogical Engineering for

Knowledge Assessment on MOOC Platforms: Open EdX and Moodle. International

Journal of Emerging Technologies in Learning (iJET),

18(05), 144–160. https://doi.org/10.3991/ijet.v18i05.36589

Bailey, D., & Almusharraf,

N. (2021). Investigating the Effect of Chatbot-to-User Questions and Directives

on Student Participation. 2021 1st International Conference on Artificial Intelligence

and Data Analytics (CAIDA), 85–90.

https://doi.org/10.1109/CAIDA51941.2021.9425208

Benke, I., Knierim, M. T., & Maedche, A. (2020).

Chatbot-based Emotion Management for Distributed Teams: A Participatory Design

Study. Proceedings of the ACM on Human-Computer Interaction, 4(CSCW2),

1–30. https://doi.org/10.1145/3415189

Bozkurt, A. (2023). Unleashing the Potential of

Generative AI, Conversational Agents and Chatbots in Educational Praxis: A

Systematic Review and Bibliometric Analysis of GenAI in Education. Open

Praxis, 15(4), 261–270. https://doi.org/10.55982/openpraxis.15.4.609

Caldeborg, A., Maivorsdotter, N., & Öhman, M. (2019). Touching the

didactic contract—A student perspective on intergenerational touch in PE. Sport,

Education and Society, 24(3), 256–268.

https://doi.org/10.1080/13573322.2017.1346600

Carrera-Rivera, A., Ochoa, W., Larrinaga, F., &

Lasa, G. (2022). How-to conduct a systematic literature review: A quick guide

for computer science research. MethodsX, 9,

101895. https://doi.org/10.1016/j.mex.2022.101895

Chassignol, M., Khoroshavin, A., Klimova, A., & Bilyatdinova,

A. (2018). Artificial Intelligence trends in education: A narrative overview. Procedia

Computer Science, 136, 16–24.

https://doi.org/10.1016/j.procs.2018.08.233

Chen, Y., Jensen, S., Albert, L. J., Gupta, S., &

Lee, T. (2023). Artificial Intelligence (AI) Student Assistants in the

Classroom: Designing Chatbots to Support Student Success. Information Systems Frontiers, 25(1),

161–182. https://doi.org/10.1007/s10796-022-10291-4

Chevallard, Y. (1998). La transposición didáctica

(Tercera Edición, Vol. 1). Aique.

Chou, T.-N. (2023). Apply an Integrated Responsible AI

Framework to Sustain the Assessment of Learning Effectiveness: Proceedings

of the 15th International Conference on Computer Supported Education,

142–149. https://doi.org/10.5220/0012058400003470

Farhi, F., Jeljeli, R.,

& Hamdi, M. E. (2022). How do Students Perceive Artificial Intelligence in

YouTube Educational Videos Selection? A Case Study of Al Ain City. International

Journal of Emerging Technologies in Learning (iJET),

17(22), 61–82. https://doi.org/10.3991/ijet.v17i22.33447

Fidan, M., & Gencel, N.

(2022). Supporting the Instructional Videos With Chatbot and Peer Feedback Mechanisms in Online

Learning: The Effects on Learning Performance and Intrinsic Motivation. Journal

of Educational Computing Research, 60(7), 1716–1741.

https://doi.org/10.1177/07356331221077901

Garito, M. A. (1991). Artificial intelligence in

education: Evolution of the teaching-learning

relationship. British Journal of Educational Technology, 22(1),

41–47. https://doi.org/10.1111/j.1467-8535.1991.tb00050.x

Hsu, H.-H., & Huang, N.-F. (2022). Xiao-Shih: A

Self-Enriched Question Answering Bot With Machine Learning

on Chinese-Based MOOCs. IEEE Transactions on Learning Technologies, 15(2),

223–237. https://doi.org/10.1109/TLT.2022.3162572

Hu, Y., & Min, H. (Kelly). (2023). The dark side

of artificial intelligence in service: The “watching-eye”

effect and privacy concerns. International Journal of Hospitality Management,

110, 103437. https://doi.org/10.1016/j.ijhm.2023.103437

Hwang, G.-J., & Chang, C.-Y. (2023). A review of

opportunities and challenges of chatbots in education. Interactive Learning

Environments, 31(7), 4099–4112.

https://doi.org/10.1080/10494820.2021.1952615

King, M. R. (2023). A Conversation on Artificial

Intelligence, Chatbots, and Plagiarism in Higher Education. Cellular and

Molecular Bioengineering, 16(1), 1–2.

https://doi.org/10.1007/s12195-022-00754-8

Kuhail, M. A., Alturki, N., Alramlawi, S., & Alhejori, K.

(2023). Interacting with educational chatbots: A systematic review. Education

and Information Technologies, 28(1), 973–1018.

https://doi.org/10.1007/s10639-022-11177-3

Labadze, L., Grigolia, M., & Machaidze, L.

(2023). Role of AI chatbots in education: Systematic

literature review. International Journal of Educational Technology in Higher

Education, 20(1), 56. https://doi.org/10.1186/s41239-023-00426-1

Ledwos, N., Mirchi, N., Yilmaz, R., Winkler-Schwartz,

A., Sawni, A., Fazlollahi,

A. M., Bissonnette, V., Bajunaid, K., Sabbagh, A. J.,

& Del Maestro, R. F. (2022). Assessment of learning curves on a simulated

neurosurgical task using metrics selected by artificial intelligence. Journal

of Neurosurgery, 137(4), 1160–1171.

https://doi.org/10.3171/2021.12.JNS211563

Lee, S., Lee, M., & Lee, S. (2022). What If

Artificial Intelligence Become Completely Ambient in Our Daily Lives? Exploring

Future Human-AI Interaction through High Fidelity Illustrations. International

Journal of Human–Computer Interaction, 1–19.

https://doi.org/10.1080/10447318.2022.2080155

Lee, S., Suk, J., Ha, H. R., Song, X. X., & Deng,

Y. (2020). Consumer’s Information Privacy and Security Concerns and Use of

Intelligent Technology. In T. Ahram, W. Karwowski, A. Vergnano,

F. Leali, & R. Taiar (Eds.), Intelligent Human

Systems Integration 2020 (Vol. 1131, pp. 1184–1189). Springer International

Publishing. https://doi.org/10.1007/978-3-030-39512-4_180

Li, H. (2022). MOOC Teaching Platform System Based on

Application of Artificial Intelligence. 2022 Second International Conference

on Advanced Technologies in Intelligent Control, Environment, Computing &

Communication Engineering (ICATIECE), 1–5.

https://doi.org/10.1109/ICATIECE56365.2022.10047595

Lin, C.-C., Huang, A. Y. Q., & Yang, S. J. H.

(2023). A Review of AI-Driven Conversational Chatbots Implementation

Methodologies and Challenges (1999–2022). Sustainability, 15(5),

4012. https://doi.org/10.3390/su15054012

Lin, K.-C., Cheng, I.-L., Huang, Y.-C., Wei, C.-W.,

Chang, W.-L., Huang, C., & Chen, N.-S. (2023). The Effects of the Badminton

Teaching–Assisted System using Electromyography and Gyroscope on Learners’

Badminton Skills. IEEE Transactions on Learning Technologies, 1–10.

https://doi.org/10.1109/TLT.2023.3292215

Lo, F., Su, F., Chen, S., Qiu, J., & Du, J.

(2021). Artificial Intelligence Aided Innovation Education Based on Multiple

Intelligence. 2021 IEEE International Conference on Artificial Intelligence,

Robotics, and Communication (ICAIRC), 12–15.

https://doi.org/10.1109/ICAIRC52191.2021.9544874

López Regalado, O., Núñez-Rojas, N., Rafael López Gil,

O., & Sánchez-Rodríguez, J. (2024). El Análisis del uso de la inteligencia

artificial en la educación universitaria: Una revisión sistemática (Analysis of the

use of artificial intelligence

in university education: a systematic review). Pixel-Bit, Revista de Medios y Educación, 70,

97–122. https://doi.org/10.12795/pixelbit.106336

Maksimova, M., Solvak, M., & Krimmer, R.

(2021). Data-Driven Personalized E-Government Services: Literature

Review and Case Study. In N. Edelmann, C. Csáki, S. Hofmann, T. J. Lampoltshammer, L. Alcaide Muñoz, P. Parycek,

G. Schwabe, & E. Tambouris (Eds.), Electronic

Participation (Vol. 12849, pp. 151–165). Springer International Publishing.

https://doi.org/10.1007/978-3-030-82824-0_12

Malik, R., Shrama, A.,

Trivedi, S., & Mishra, R. (2021). Adoption of Chatbots for Learning among

University Students: Role of Perceived Convenience and Enhanced Performance. International

Journal of Emerging Technologies in Learning (iJET),

16(18), 200. https://doi.org/10.3991/ijet.v16i18.24315

Meng, J., & Dai, Y. (Nancy). (2021). Emotional

Support from AI Chatbots: Should a Supportive Partner Self-Disclose or Not? Journal

of Computer-Mediated Communication, 26(4), 207–222.

https://doi.org/10.1093/jcmc/zmab005

Mesquita, A., Oliveira, L., & Sequeira, A. S.

(2021). Did AI Kill My Job?: Impacts

of the Fourth Industrial Revolution in Administrative Job Positions in

Portugal. In J.-É. Pelet (Ed.), Advances in

Business Information Systems and Analytics (pp. 124–146). IGI Global.

https://doi.org/10.4018/978-1-7998-3756-5.ch008

Ohanian, T. (2019). How

Artificial Intelligence and Machine Learning May Eventually Change Content

Creation Methodologies. SMPTE Motion Imaging Journal, 128(1),

33–40. https://doi.org/10.5594/JMI.2018.2876781

Pranckutė, R. (2021). Web

of Science (WoS) and Scopus: The Titans of

Bibliographic Information in Today’s Academic World. Publications, 9(1),

12. https://doi.org/10.3390/publications9010012

Qiu, C., Liang, W., Yan, Z., Li, Y., & You, Y.

(2022). Research and Application of Power Grid Fault Diagnosis and Auxiliary

Decision-making System Based on Artificial Intelligence Technology. 2022 7th

International Conference on Power and Renewable Energy (ICPRE), 492–497.

https://doi.org/10.1109/ICPRE55555.2022.9960335

Raphael, M. W. (2022). Artificial intelligence and the

situational rationality of diagnosis: Human problem‐solving and the artifacts of health and medicine. Sociology

Compass, 16(11). https://doi.org/10.1111/soc4.13047

Reis, L., Maier, C., & Weitzel, T. (2022).

Chatbots in Marketing: An In-Deep Case Study Capturing Future Perspectives of

AI in Advertising. Proceedings of the Conference on Computers and People

Research, 1–8. https://doi.org/10.1145/3510606.3550204

Safadel, P., Hwang, S.

N., & Perrin, J. M. (2023). User Acceptance of a Virtual Librarian Chatbot:

An Implementation Method Using IBM Watson Natural Language Processing in

Virtual Immersive Environment. TechTrends.

https://doi.org/10.1007/s11528-023-00881-7

Salvagno, M., Taccone,

F. S., & Gerli, A. G. (2023). Can artificial

intelligence help for scientific writing? Critical

Care, 27(1), 75. https://doi.org/10.1186/s13054-023-04380-2

Smutny, P., & Schreiberova,

P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Computers & Education, 151,

103862. https://doi.org/10.1016/j.compedu.2020.103862

Srimathi, H., & Krishnamoorthy, A. (2019).

Personalization of student support services using chatbot. International

Journal of Scientific and Technology Research, 8(9), 1744–1747.


Su, J., & Yang, W. (2023). Unlocking the Power of

ChatGPT: A Framework for Applying Generative AI in Education. ECNU Review of

Education, 6(3), 355–366. https://doi.org/10.1177/20965311231168423

Sun, J., Zhang, X., & Ding, Y. (2022). Research on

trustworthiness assessment technology of intelligent decision-making system. In

S. Yang & G. Wu (Eds.), Third International Conference on Artificial

Intelligence and Electromechanical Automation (AIEA 2022) (p. 78). SPIE.

https://doi.org/10.1117/12.2646844

Surahman, E., & Wang,

T. (2022). Academic dishonesty and trustworthy assessment in online learning: A

systematic literature review. Journal of Computer Assisted Learning, 38(6),

1535–1553. https://doi.org/10.1111/jcal.12708

Tlili, A., Shehata, B., Adarkwah,

M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyemang, B. (2023). What

if the devil is my guardian angel: ChatGPT as a case study of using chatbots in

education. Smart Learning Environments, 10(1), 15.

https://doi.org/10.1186/s40561-023-00237-x

Villegas-José, V., &

Delgado-García, M. (2024). Inteligencia artificial: Revolución educativa

innovadora en la Educación Superior. Pixel-Bit,

Revista de Medios y Educación, 71, 159–177. https://doi.org/10.12795/pixelbit.107760

Wilson, L., & Marasoiu,

M. (2022). The Development and Use of Chatbots in Public Health:

Scoping Review. JMIR Human Factors, 9(4), e35882.

https://doi.org/10.2196/35882

Winkler, R., & Söllner,

M. (2018). Unleashing the potential of chatbots in education: A

state-of-the-art analysis. Academy of Management Annual Meeting (AOM).

Zambrano, A. (2005). Didáctica, pedagogía y saber:

Aportes desde las ciencias de la educación. Editorial Magisterio.